Faceware Software Products

REALTIME SOFTWARE AND PLUG-INS

Faceware Studio

Faceware Studio is our brand new high-quality, realtime animation software that tracks facial movement from live or recorded video. It uses state-of-the-art machine learning and deep learning techniques to track your face and create animation in realtime.

The realtime facial animation platform

Key Features

TRACK ANY FACE

With single-click calibration, Studio tracks and animates any facial performance. Our neural network technology easily recognizes your face whether from a live camera or from pre-recorded media. Interactive events, virtual production, and large scale content creation are all made possible with Studio.

ADVANCED TUNING

Easily visualize and adjust your actor-specific profile with Animation Tuning. Everyone’s face is different; Studio provides the feedback and tools you need to tailor your animation to your actor’s unique performance.

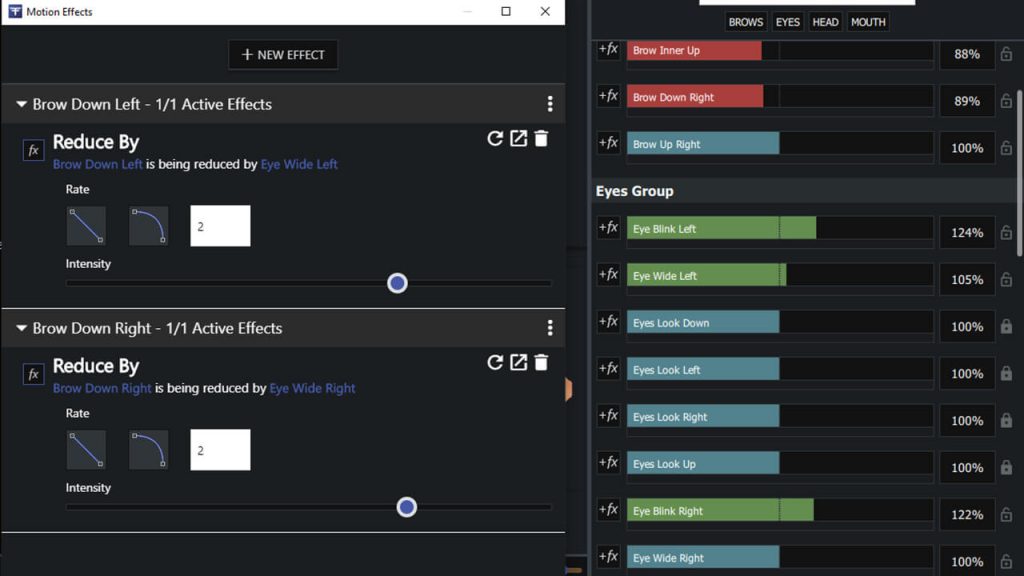

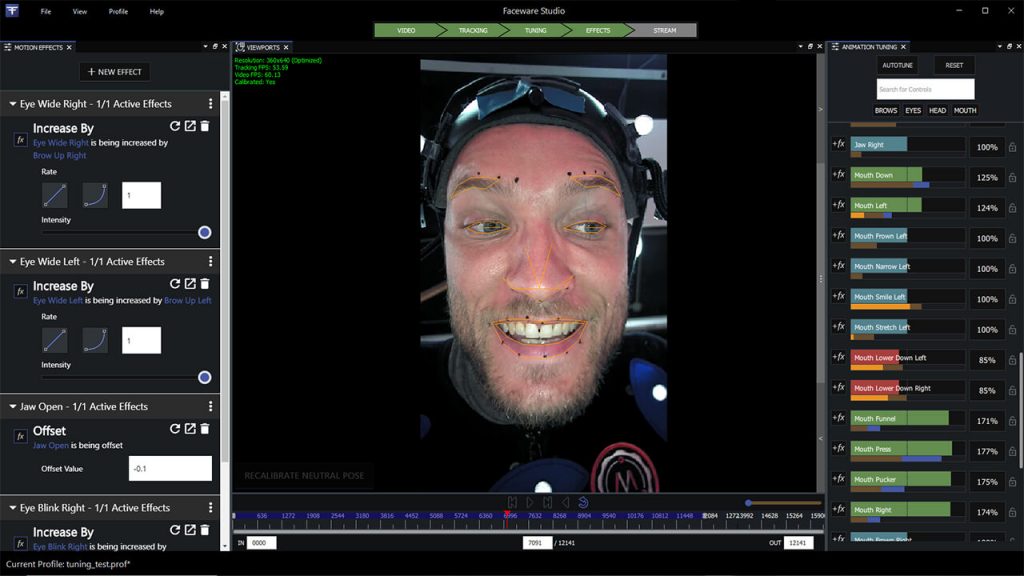

MOTION EFFECTS

Motion Effects is a powerful system for building additional logic into your realtime data stream. Effects are used to manipulate your data to perform exactly the way you want it to, giving you unparalleled and direct control over your final animation.

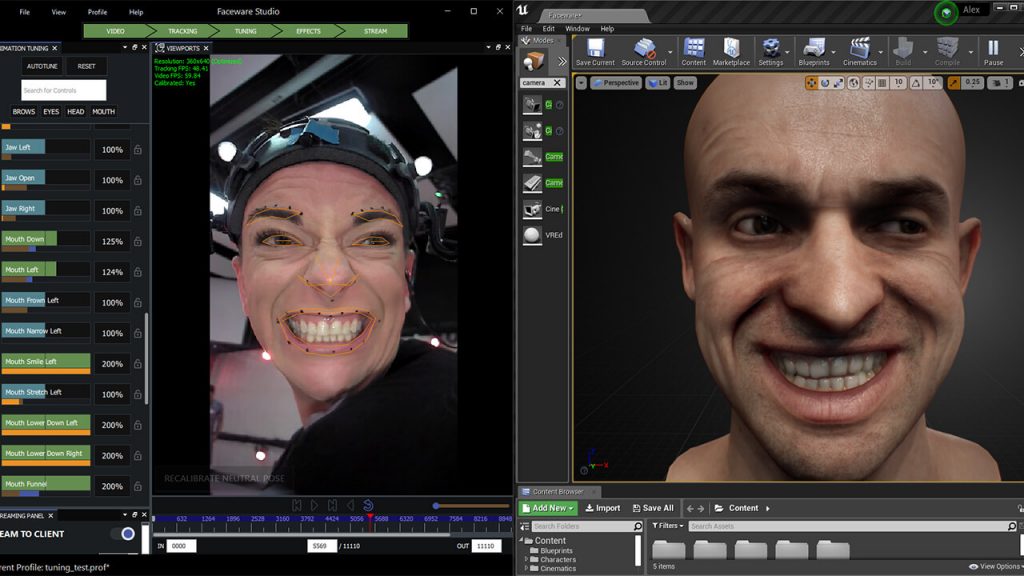

ANIMATE ANY CHARACTER

Studio gives you the freedom to animate any character rig. Easily stream facial animation data to our Faceware-supported plugins in Unreal Engine, Unity, and MotionBuilder (and soon Maya) by mapping Studio’s standard set of controls to your custom avatar.
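The mapping step can be pictured as a lookup table from Studio controls to rig attributes. The following is a minimal sketch only: the control names, rig attribute names, and scale factors are hypothetical and are not taken from Studio or any Live Client plugin.

```python
# Hypothetical control names and rig attributes, for illustration only.
CONTROL_TO_RIG = {
    "jaw_open": ("face_rig.jawOpen", 1.0),
    "mouth_smile_left": ("face_rig.smile_L", 0.8),
    "brow_raise_right": ("face_rig.browUp_R", 1.2),
}


def map_frame(frame, mapping=CONTROL_TO_RIG):
    """Translate one frame of {control: value} into {rig_attribute: value}.

    Controls without a mapping entry are skipped rather than raising.
    """
    rig_values = {}
    for control, value in frame.items():
        if control not in mapping:
            continue
        attribute, scale = mapping[control]
        rig_values[attribute] = value * scale
    return rig_values
```

Keeping the table as plain data makes it easy to maintain one mapping per character without touching the code that applies it.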

Realtime Workflow

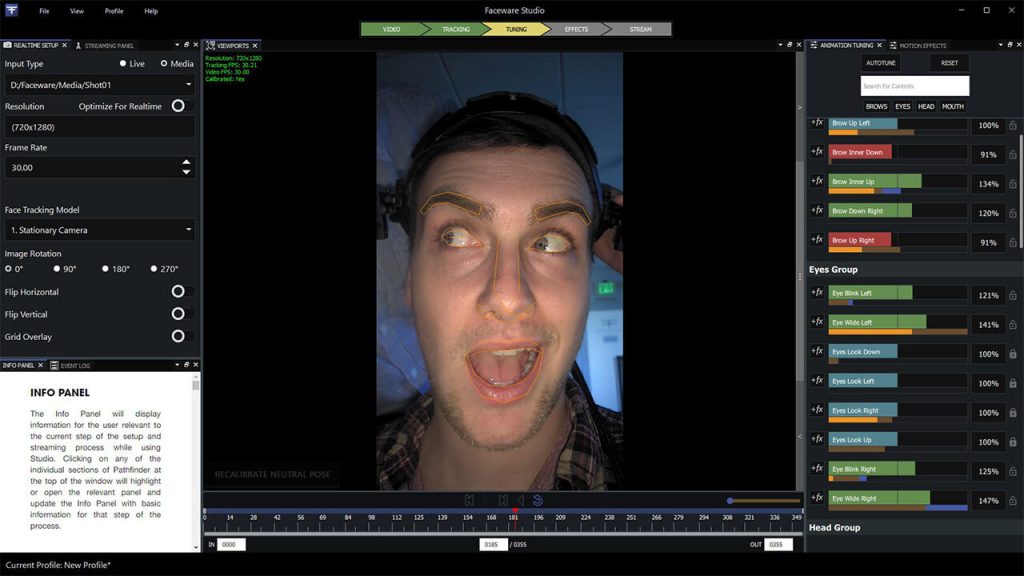

REALTIME SETUP

Use the Realtime Setup panel to select the camera, image sequence, or pre-recorded video that you want to track. Then, use the controls in the panel to adjust various options like frame rate, resolution, and rotation. Depending on your input, select the Stationary or Professional Headcam model. The Pathfinder feature will alert you whether your realtime setup is optimal.

CALIBRATION & TRACKING

With your realtime setup complete, the Tracking Viewport will begin displaying your media. In order to calibrate your tracking, your actor should be properly framed, focused, and in a neutral pose. If using pre-recorded media, use the media playback controls to find an ideal neutral pose. Then, use the Calibrate Neutral Pose button to begin tracking.

TUNE YOUR ANIMATION

The Animation Tuning panel contains a list of each shape and its corresponding value as your face is being tracked. The moving, colored bars give you instant feedback about each shape. Click and drag the slider to increase or decrease the influence of each shape. In the Motion Effects panel, use the included effects to enhance your data or use Python to create your own custom effects on a per-control basis.
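To give a feel for what a per-control effect does, here is a small standalone sketch that smooths one control with an exponential moving average. It is not Studio’s Python effect interface: the class, method, and control names are assumptions made for illustration.

```python
class SmoothingEffect:
    """Exponential moving average applied to a single control's values."""

    def __init__(self, alpha=0.5):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self._previous = None

    def apply(self, value):
        # The first value passes through; later values are blended with history.
        if self._previous is None:
            self._previous = value
        else:
            self._previous = self.alpha * value + (1.0 - self.alpha) * self._previous
        return self._previous


def run_effects(frames, effects):
    """Apply each control's effect (if any) to a stream of {control: value} frames."""
    return [
        {name: effects[name].apply(v) if name in effects else v for name, v in frame.items()}
        for frame in frames
    ]
```

A lower `alpha` gives a steadier but laggier signal, so a value chosen for brows may be too sluggish for lip sync.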

STREAM TO ENGINE

The Streaming panel gives you a myriad of options to enable and customize your data stream. To stream your animation data from Faceware Studio, configure the Faceware-supported plugin in your engine and then click ‘Stream to Client’ to see animation on your character.

For Unreal Engine

Make something Unreal!

Unreal Engine is the world’s most open and advanced real-time 3D creation tool. Continuously evolving to serve not only its original purpose as a state-of-the-art game engine, today it gives creators across industries the freedom and control to deliver cutting-edge content, interactive experiences, and immersive virtual worlds.

Faceware Studio connects to Unreal Engine through the Live Client plugin (developed by Glassbox). Unreal is an ideal choice for virtual production, pre-visualization, and projects that require high-quality character rendering, and it provides an intuitive toolset for connecting the animation data from Studio to your character.

For Unity

Create a world with more play!

Unity is the world’s leading real-time 3D development platform, providing the tools to create amazing games and publish them to the widest range of devices. The Unity core platform enables entire creative teams to be more productive together.

Faceware Studio connects to Unity through Live Client for Unity, a free plugin we developed that makes it easy to map and record the animation data from Studio to your character in the engine.

Autodesk MotionBuilder

3D character animation software

Capture, edit, and play back complex character animation with MotionBuilder® 3D character animation software. Work in an interactive environment that’s optimized for both animators and directors. Create realistic movement for your characters with one of the industry’s fastest animation tools.

Faceware Studio connects to MotionBuilder through a plugin called Live Client for MotionBuilder, available for free through your Faceware User Portal. MotionBuilder is a common and ideal choice for traditional motion capture pipelines looking to record facial animation data being streamed from Studio.

(official website : Faceware Technologies, Inc. – Studio)

CREATION SUITE SOFTWARE

Analyzer

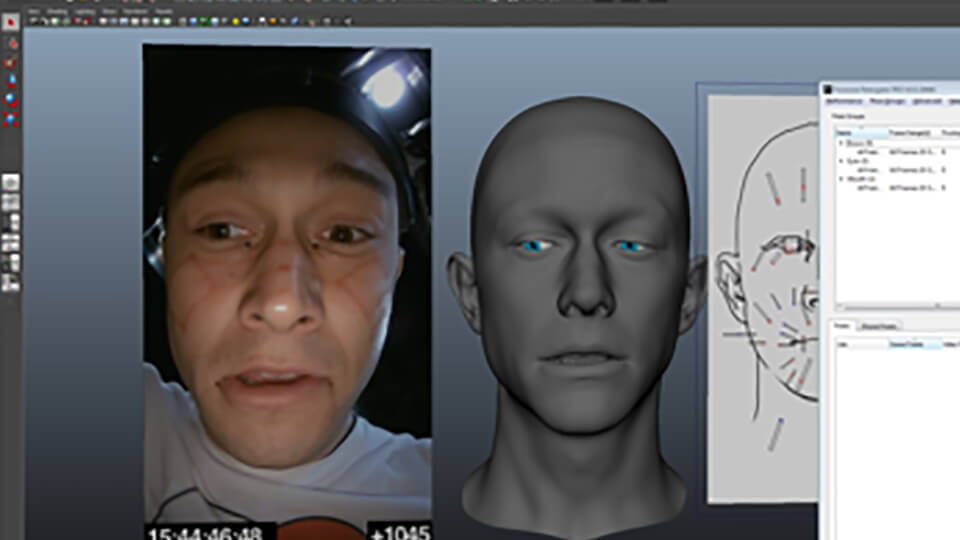

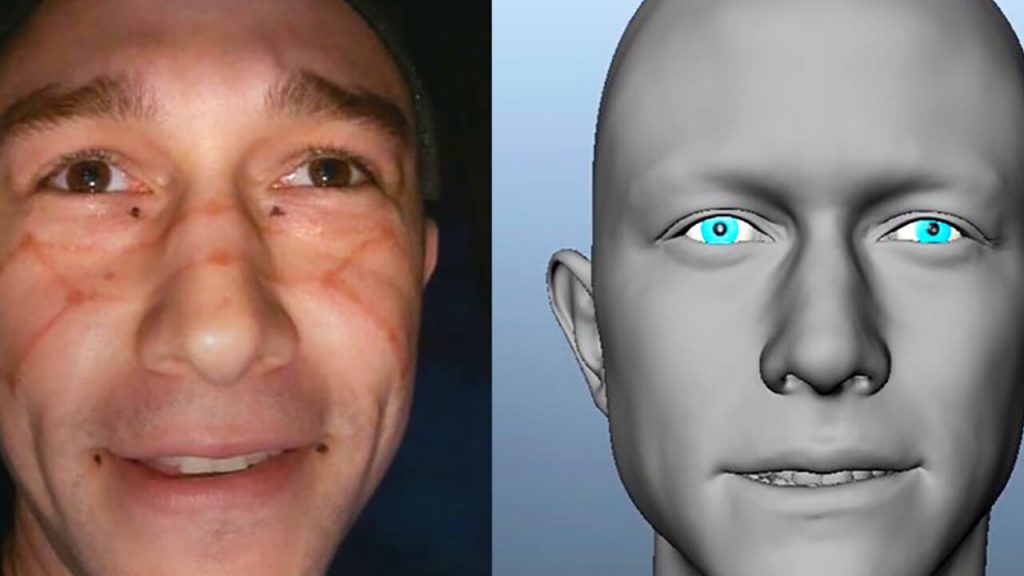

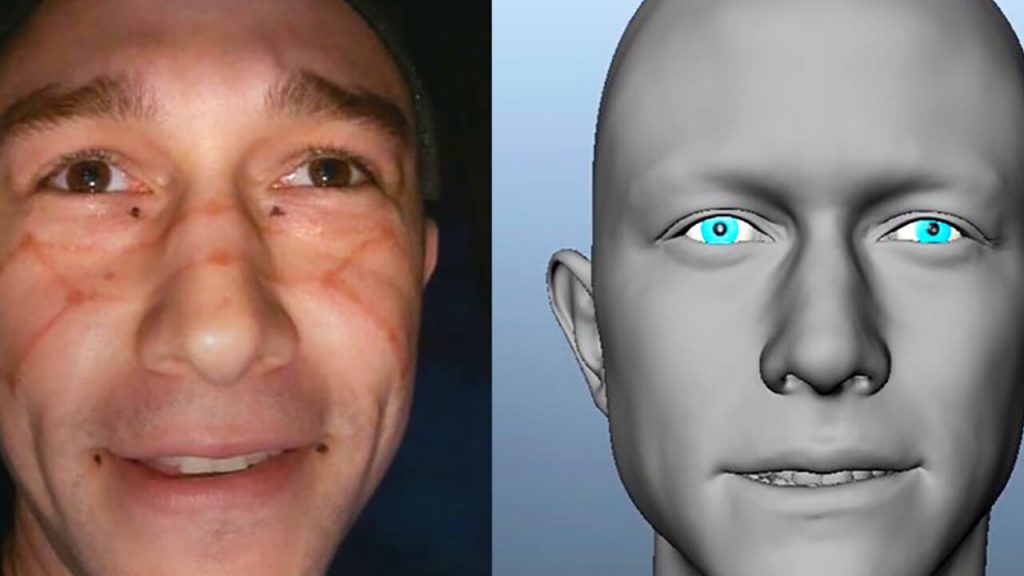

Analyzer is our standalone facial tracking software. Analyzer converts video of facial performances into proprietary motion data that can be applied to any character via our Retargeter plug-in for Autodesk animation products.

Professional facial tracking software

Features

- Analyze facial expression and movement from video

- Data can be used in most CG, VFX, or AAA production pipelines

- Easy-to-learn process with free training resources

- Create highly automated workflows for large volume demands

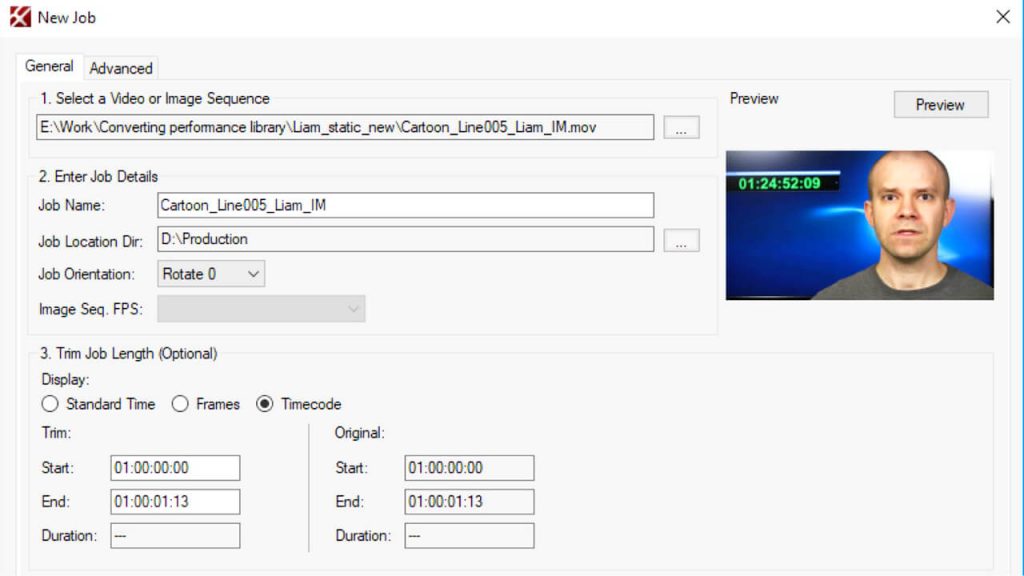

General Workflow for Analyzer 3.0

SETUP

A new “job” is the term for a tracking project in Analyzer. An Analyzer job consists of two separate things:

- An .fwt file (FaceWare Tracking) that serves as the main project file for your job. This is the file you’ll select when you wish to open an existing job.

- A folder that shares the same name as the job with “_fwt” added to the end. This folder contains many of the working files for your tracking job, including the image sequence that you are working from.
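The job layout described above can be expressed as a small path helper. This is a sketch under one assumption: that the working folder sits next to the .fwt file. The function name is hypothetical.

```python
from pathlib import Path


def job_paths(fwt_file):
    """Return (project_file, working_folder) for an Analyzer job.

    The working folder is named after the job with "_fwt" appended.
    Assumption: it lives in the same directory as the .fwt file.
    """
    project = Path(fwt_file)
    if project.suffix.lower() != ".fwt":
        raise ValueError(f"not an .fwt project file: {project}")
    return project, project.with_name(project.stem + "_fwt")
```

For example, `job_paths("shots/take01.fwt")` pairs the project file with a `shots/take01_fwt` folder.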

TRACKING

You give the software information about the actor’s performance by marking up frames that have extreme and distinct expressions, creating training frames. These frames are then processed by the software to allow it to hit all of the in-between expressions. Marking up a frame means moving points (called “landmarks” in Analyzer) around the face in a specific way.

TRAINING

The main purpose of Faceware Analyzer is to track facial expressions quickly and accurately so those expressions can be applied to facial animation using Faceware Retargeter. Training lets the user create the shapes for a particular actor, eventually ending up with a model that reflects the actor’s expressions. This increases the accuracy of the track and does a better job at picking up the kinds of small movements that really help to sell a facial performance.

EXPORTING

Our export process is called “Parameterization.” This is the process through which tracking data in Analyzer is converted into a form that can be used to produce animation with Retargeter (a .fwr file). Parameterizing is the final step in the tracking process which combines the four processes of Parameterization.

Once parameterization is complete, Analyzer will have created a .fwr file in the same location as the .fwt file and project folder. If your video was 30 seconds long, your corresponding .fwr file will contain 30 seconds of motion data.

This .fwr is the performance file that will be loaded into Retargeter.

Features of Analyzer 3.0

Expression and texture tracking for complete facial motion analysis

Analyzer is a high-quality production software that tracks facial movement from video using machine learning and deep learning. It uses a markerless technology to track everything the face can do at an extremely high quality.

Robust toolset with intuitive workflow

Looking for the premier facial tracking software used by professionals around the world? This is it. Faceware products have been used on thousands of ground-breaking projects and we’re constantly making them better. Analyzer is simply the most powerful tool available for professional facial tracking.

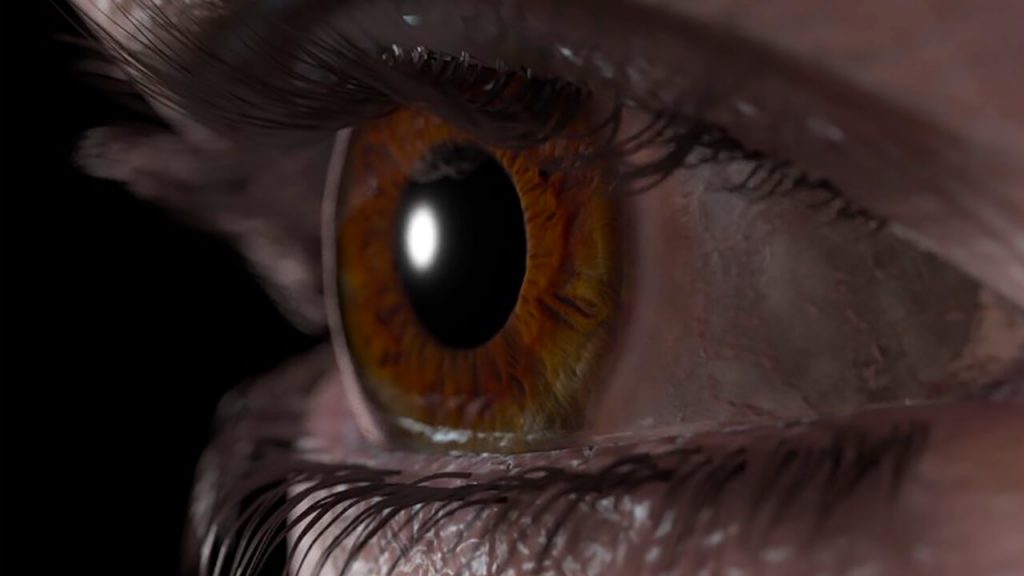

Eyes are the key to emotion and performance

Eyes bring out the emotion and believability of any performance. Our advanced pixel-tracking technology provides extremely accurate eye and gaze tracking, further reducing the barrier between your performance actor and their digital counterpart.

Patented & best in class technology

Faceware’s technology is built from Image Metrics’ consumer-grade technology, meaning Analyzer can work on nearly any face in nearly any lighting condition. Enjoy exclusive features built from millions of facial performance images.

Distinguishing Features of Analyzer 3.0

PARAMETERIZATION

Parameterization is a process that extracts additional facial movement from our feature tracker by sampling every pixel for every frame of video that is analyzed. Without any additional user input, Parameterization allows hard-to-capture areas such as the upper cheeks, lower eyelids, and jaw position to be tracked with extreme accuracy.

GLOBAL TRACKING MODELS

Have a large number of videos to process? Analyzer Studio Plus has features to export the statistical tracking data you create on your first video to help track any additional videos with the same actor. This allows large-scale projects to benefit from our machine learning technology, particularly if there are a large number of shots with the same actor.

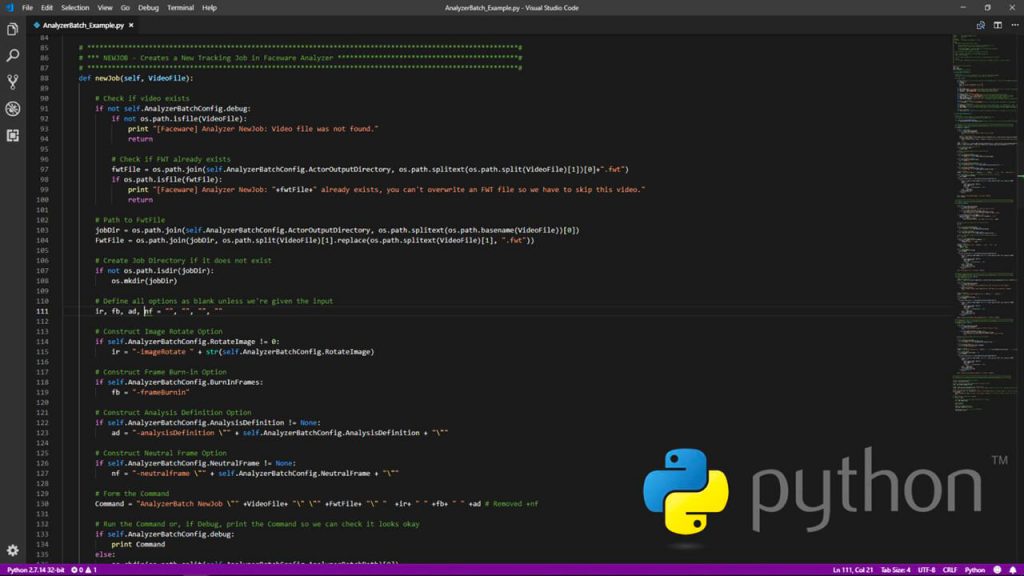

AUTOMATION API

Strapped for resources? Analyzer contains an entire library of batch API commands and arguments to access nearly any part of the Analyzer workflow. This library allows users to create semi- and fully automated workflows. Our largest users process tens of thousands of seconds of facial animation without any user input on a shot-by-shot basis.
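A batch pipeline usually starts by working out which jobs still need processing. The sketch below scans a folder tree for .fwt project files that do not yet have a .fwr next to them. It assumes the .fwr shares the job’s file name, which is not stated here, and it deliberately leaves out the Analyzer batch commands themselves because their syntax is not covered in this overview.

```python
from pathlib import Path


def pending_jobs(root):
    """List .fwt project files under root that have no matching .fwr yet.

    Assumption: the .fwr produced by parameterization has the same file
    name as the .fwt, with only the extension changed.
    """
    pending = []
    for project in sorted(Path(root).rglob("*.fwt")):
        if not project.with_suffix(".fwr").exists():
            pending.append(project)
    return pending
```

The returned list can then be handed to whatever batch mechanism your pipeline uses, one job at a time.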

INTELLIGENT DRAG

We know a lot about tracking faces and are constantly looking at ways to improve our workflow. Creating tracking data is a breeze with a feature called Intelligent Drag. Once one landmark tracking point is correctly placed on the face, all other tracking points can be quickly placed on the face. Combined with a smart approach and Analyzer’s clean, simple workflow, you don’t need to be a scientist to get professional facial tracking.

(official website : Faceware Technologies, Inc. – Analyzer)

CREATION SUITE SOFTWARE

Retargeter

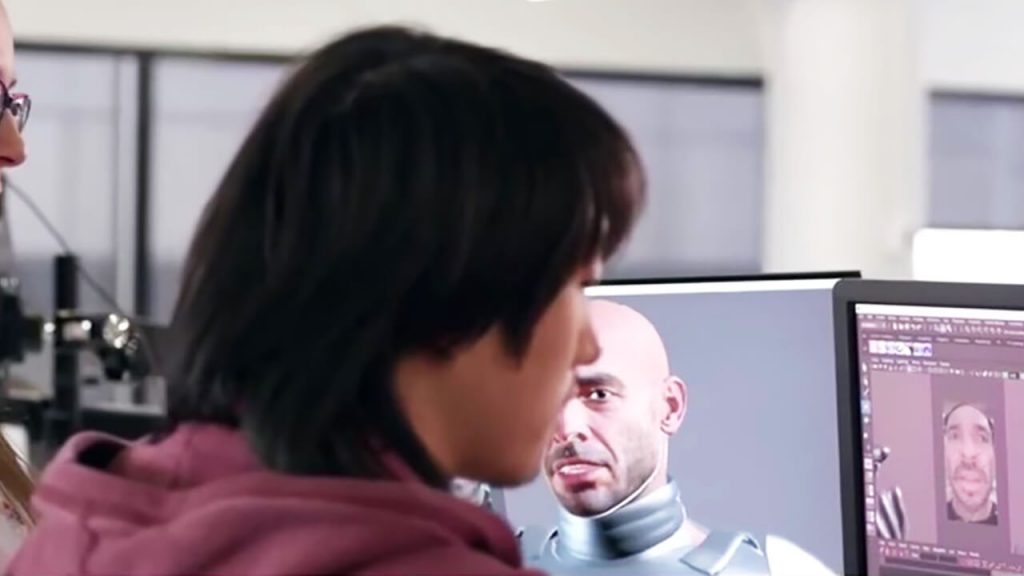

Retargeter is our animation and solving plug-in to common Autodesk animation products such as Maya, 3DS Max, and MotionBuilder. Retargeter takes the data from Analyzer and applies the motion to your characters through an intuitive and pose-based workflow.

Professional facial tracking software

Features

- Autodesk Maya, 3DS Max, and MotionBuilder Plug-In

- Apply motion data from Analyzer directly onto your rig

- Clean curve data that is easier for users to work with

- Python, Maxscript, and MEL commands create automated workflows

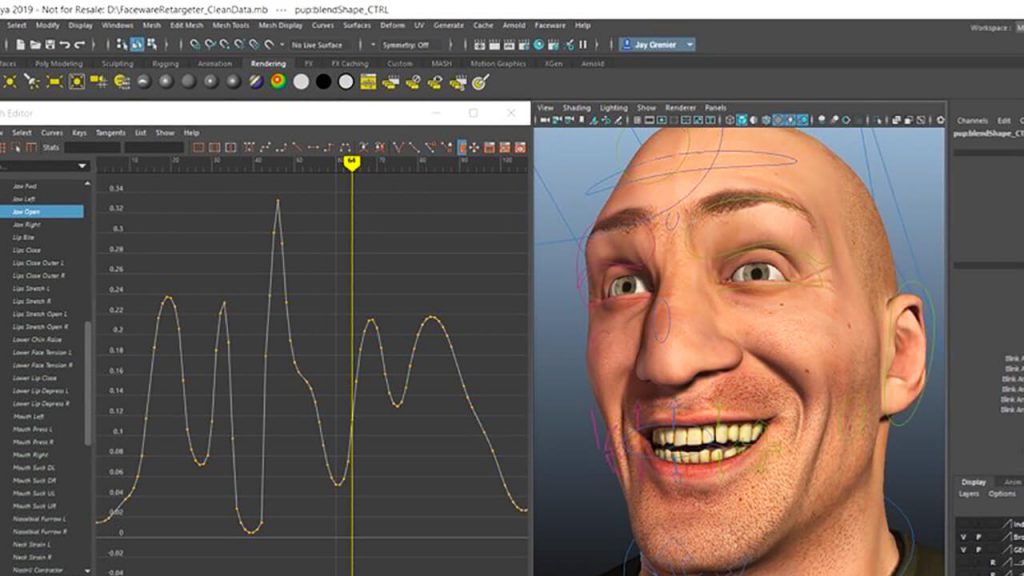

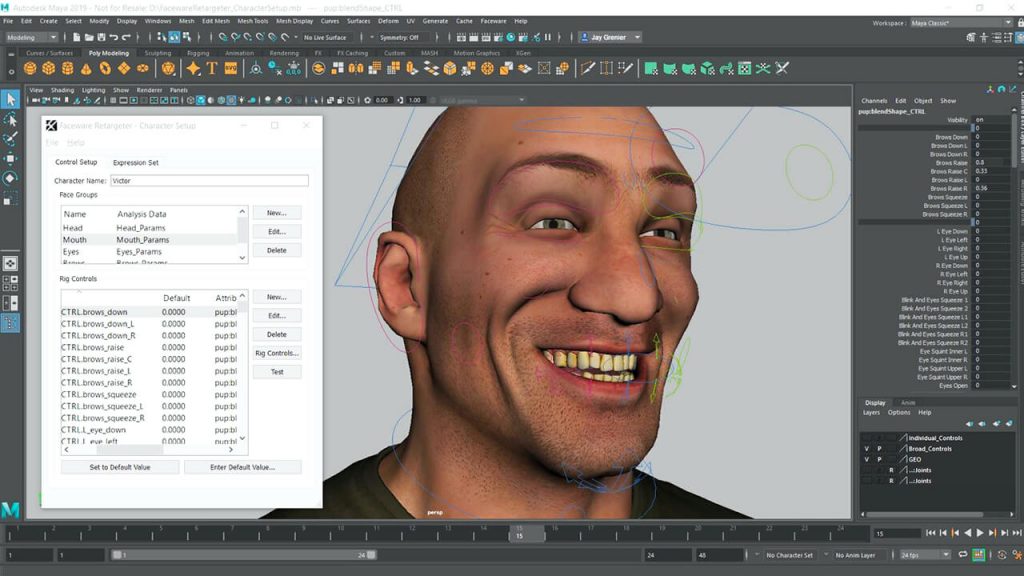

General Workflow for Retargeter 5.0

CHARACTER SETUP

Before you can begin creating animation you’ll need to go through the quick and simple Character Setup process. This will be done once per character and typically takes between 10 and 30 minutes to complete. The result of this process is the character setup file, an XML file used when opening a performance in Retargeter.

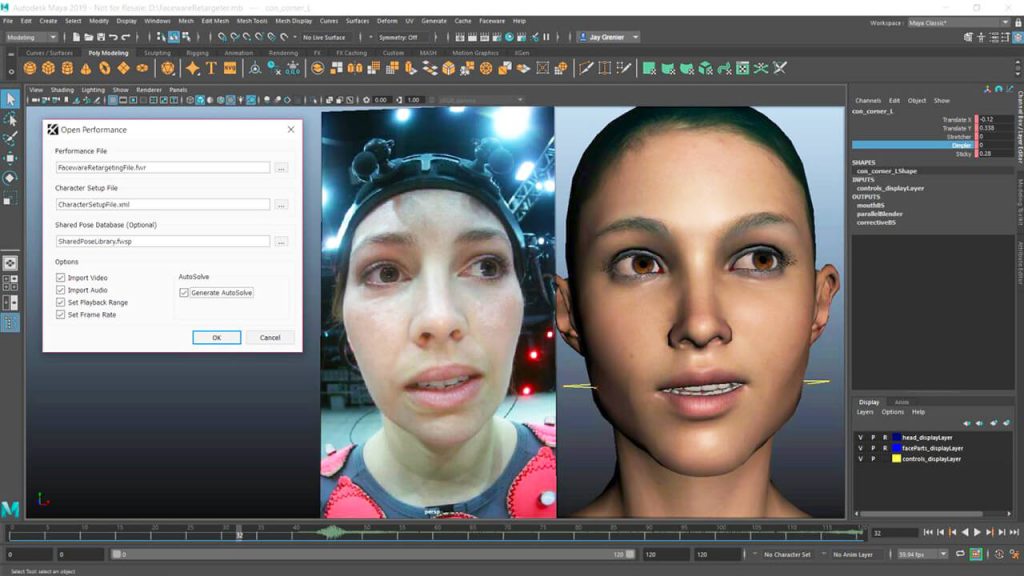

IMPORT PERFORMANCE

The subtleties and nuances of a facial performance can make or break an animation. For purposes of creating animation of the highest quality and for adding artistic decisions to the animation process, we have Retargeter.

The first step in retargeting a shot is to open a performance via the Performance menu. In the Open Performance dialog box, you will be asked to select several files:

Performance File (.fwr) – In the Performance File field, select the .fwr performance file that you generated during parameterization in Analyzer that you wish to animate from. This file contains the tracking data that Retargeter uses.

Character Setup File (.xml) – In the Character Setup field, select the character setup xml file that you created for this character during the Character Setup phase. For more information on Character Setup, please see the following article: Character Setup Walkthrough.

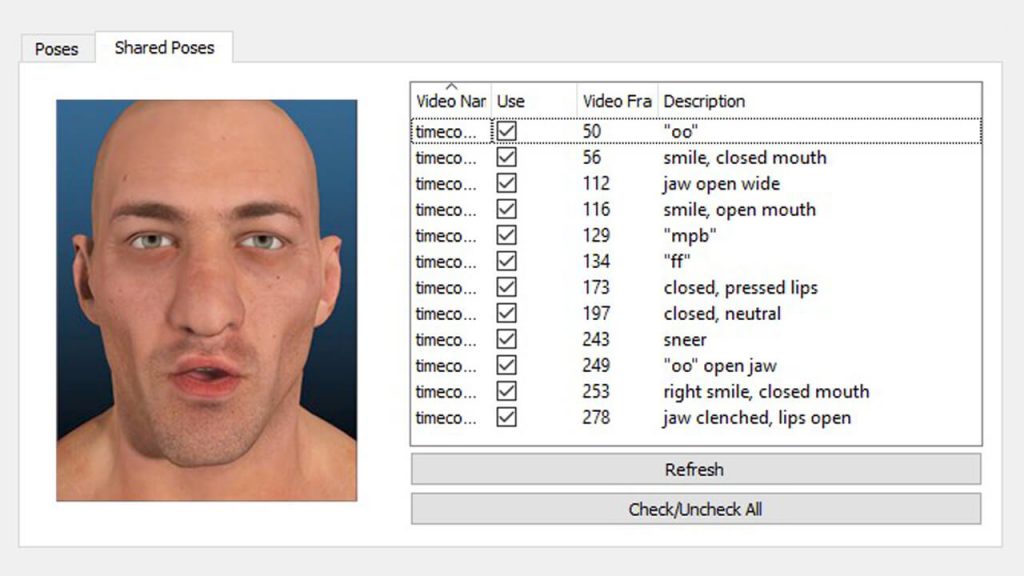

Shared Pose Database (Optional) (.fwsp) – The ‘Shared Pose Database File’ is an optional file that can be used to load “pre-made” poses for your character. This file is usually created by a supervisor and contains artistically approved poses for each of the character’s ‘Pose Groups’ (mouth, eyes, brows). Please see Shared Poses for more information.

From here you have a variety of options to choose from, including: Import Video, Import Audio, Set Playback Range, Set Frame Rate and Generate AutoSolve.
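The three inputs of the Open Performance dialog can be checked up front in a pipeline script. This is a minimal sketch that only validates file extensions; the class and method names are hypothetical and nothing here calls Retargeter.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Optional


@dataclass
class PerformanceInputs:
    performance_file: Path               # .fwr from Analyzer parameterization
    character_setup: Path                # .xml from Character Setup
    shared_poses: Optional[Path] = None  # optional .fwsp shared pose database

    def problems(self):
        """Return a list of human-readable problems; empty means the set looks valid."""
        expected = [
            (self.performance_file, ".fwr", True),
            (self.character_setup, ".xml", True),
            (self.shared_poses, ".fwsp", False),
        ]
        issues = []
        for path, suffix, required in expected:
            if path is None:
                if required:
                    issues.append(f"missing required {suffix} file")
                continue
            if Path(path).suffix.lower() != suffix:
                issues.append(f"{path} should be a {suffix} file")
        return issues
```

Checking the set before opening a shot catches the common mistake of selecting the .fwt project file instead of the .fwr performance file.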

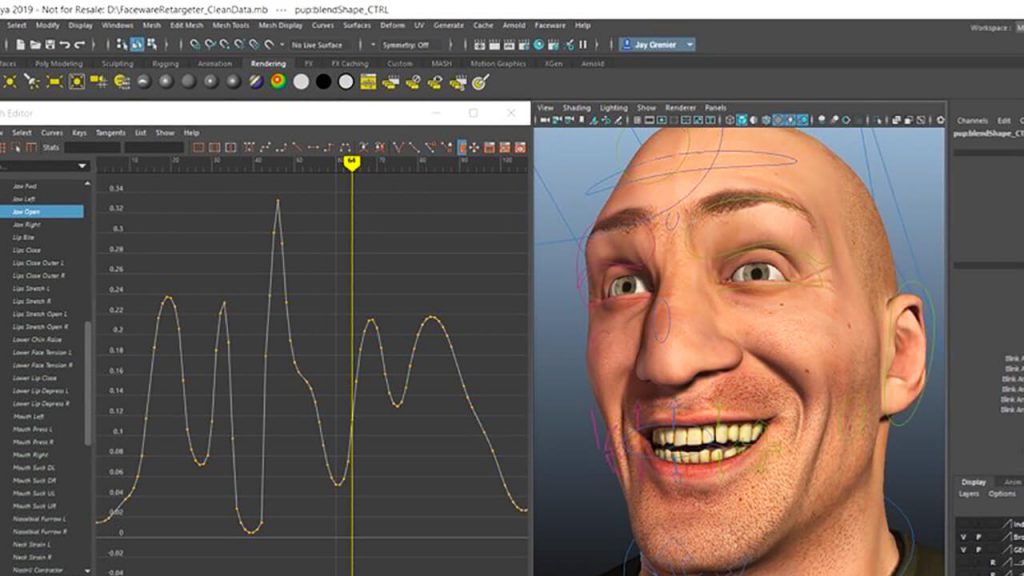

ANIMATE

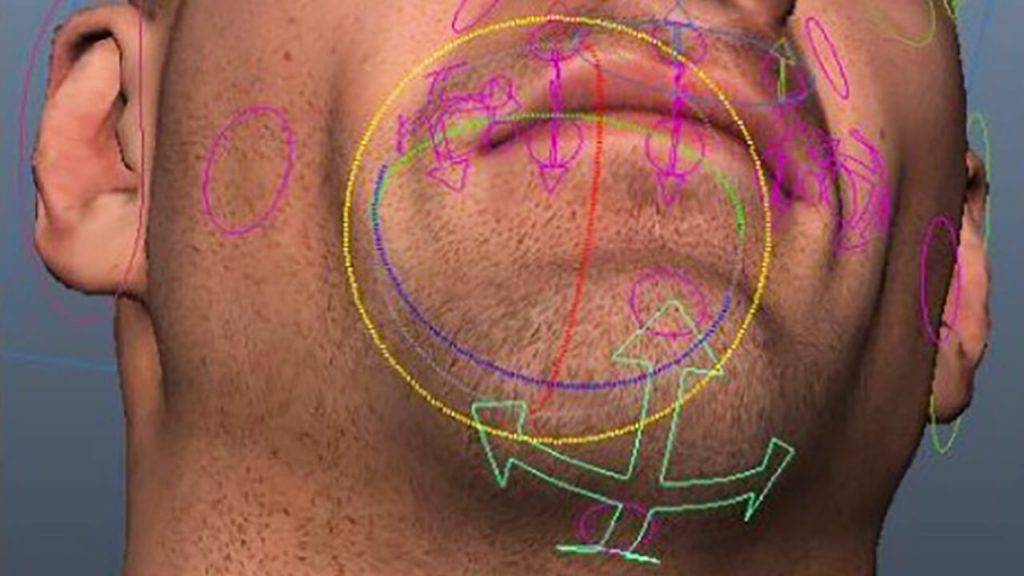

In Retargeter, the first section at the top shows you your Pose Groups. Pose groups are containers that hold the poses for each respective group. For example, the eyebrow poses would be placed in the eyebrow pose group, the mouth poses in the mouth group, and so forth.

These groups are based off of the ‘Rig Groups’ that have been defined for your character rig and each one has a number of the rig’s controls in it. These groups are typically set to: Mouth, Eyes, Brows, Head.

Poses are an essential part of animating with Retargeter. Much like creating Training Frames in Analyzer, the user decides where the most extreme and distinct facial shapes are occurring and poses the character appropriately on the given frame. These poses are then used in Retargeter’s calculation to apply animation to the entire shot, usually referred to as “retargeting.” If you’re unhappy with the results you can adjust your poses, add more, delete some, or make an infinite number of tweaks and check your updated results in near realtime by Retargeting/AutoSolving again.
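To illustrate the idea of spreading a handful of poses across a whole shot, the sketch below blends the animator’s poses by how closely each pose frame’s tracking data resembles the current frame. It uses plain inverse-distance weighting as a stand-in; it is not Retargeter’s actual solve, and the data shapes are assumptions.

```python
import math


def retarget_frame(tracking, poses, power=2.0):
    """Blend rig values from user poses by similarity of tracking data.

    tracking: list of floats for the frame being solved.
    poses: list of (pose_tracking, rig_values) pairs, where rig_values is
           a {control: value} dict set by the animator on a pose frame.
    """
    if not poses:
        raise ValueError("at least one pose is required")
    weights = []
    for pose_tracking, rig_values in poses:
        distance = math.dist(tracking, pose_tracking)
        if distance == 0.0:
            return dict(rig_values)  # exactly on a pose frame: reproduce the pose
        weights.append(1.0 / distance ** power)
    total = sum(weights)
    controls = poses[0][1].keys()
    return {
        c: sum(w * rig[c] for w, (_, rig) in zip(weights, poses)) / total
        for c in controls
    }
```

Adding or adjusting a pose changes the blend for every frame that resembles it, which mirrors why a few well-chosen poses can improve a whole shot.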

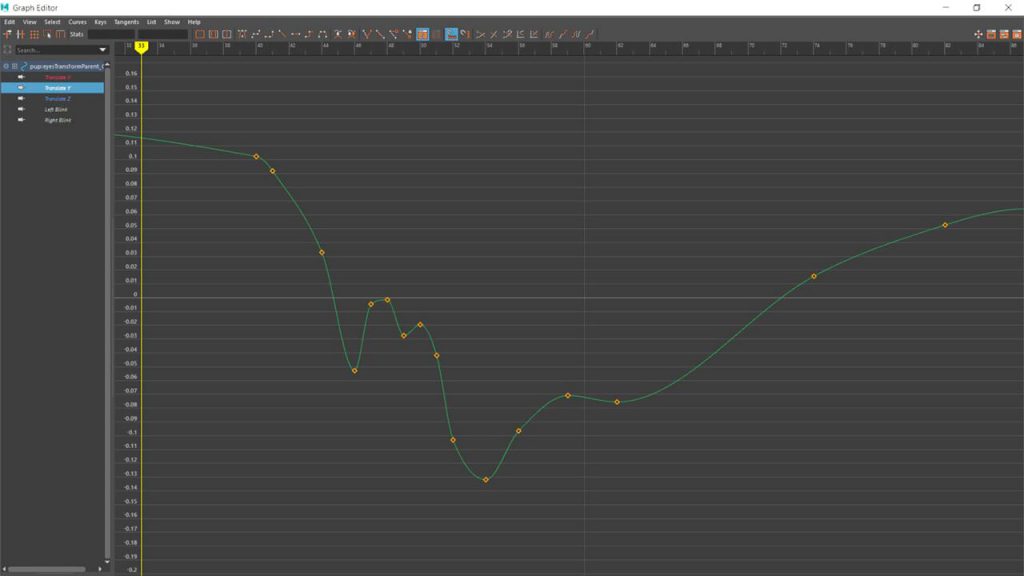

REFINEMENT

Retargeting is the process through which animation is generated on your rig. It uses the data from any created poses or from the AutoSolve calculations (assuming AutoSolve is on) to create keyframes on the controls that were added in Character Setup. From there, the user can manually edit the keyframes like with any animation or they can add/modify poses and retarget again to improve the animation broadly.

To retarget animation onto the rig:

- Once you are satisfied with the poses you have created, select the pose group you wish to retarget in the Pose Group window.

- Click the “Retarget” button on the bottom of the Retargeter plugin. Retargeter will then work for a few moments to create the keyframes.

- Scrub through the timeline or play the animation to view the results.

You can continue to add poses and retarget as many times as you like to get the desired animation. Feel free to delete poses if they are not in an ideal position or are hurting your results. Simply select the pose and hit the Delete button on the right side of the Retargeter plugin.

Features of Retargeter 5.0

Apply human emotion to any digital character

Retargeter is a high quality production software that uses machine learning and deep learning to retarget facial motion from a tracked video onto a 3D Character.

Setup and use any character rig

Say goodbye to complex rig requirements. The quality of your character is still extremely important but with Retargeter you have flexibility in how you build it. Retargeter can set keys on any keyable attribute; allowing you to work with bones, joints, blendshapes, morph targets, custom deformers, etc. Once you set these attributes in character setup, you are ready to create amazing, realistic animations.

The best retargeting features designed for animators

We believe that you shouldn’t need to be an expert in machine learning to create animation; our products are designed by professional animators working in real productions. Our tools are simple and easy to understand, yet fully featured and capable of performing extremely complex and believable facial animation.

Clean data that’s easy to work with

We know you need software that is easy to use, intuitive, and guaranteed to work every time. Retargeter’s features take the stress out of keyframe changes and polishing. Data is applied very quickly so iterations are fast and efficient. Intelligent keyframe reduction (pruning), animation smoothing, a master timing tool, frame ranges, and several other features make working with our data very easy.
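Keyframe reduction can be illustrated with a generic curve-simplification pass. The sketch below uses a Douglas-Peucker style algorithm on (frame, value) keys as a stand-in; Retargeter’s own pruning method is not described here and may differ. Frames are assumed to be strictly increasing.

```python
def prune_keys(keys, tolerance=0.01):
    """Reduce (frame, value) keys with a Douglas-Peucker style pass.

    Every dropped key lies within `tolerance` of the straight line between
    the kept keys on either side of it.
    """
    if len(keys) <= 2:
        return list(keys)
    (f0, v0), (f1, v1) = keys[0], keys[-1]
    worst_index, worst_error = 0, 0.0
    for i in range(1, len(keys) - 1):
        frame, value = keys[i]
        expected = v0 + (v1 - v0) * (frame - f0) / (f1 - f0)
        error = abs(value - expected)
        if error > worst_error:
            worst_index, worst_error = i, error
    if worst_error <= tolerance:
        return [keys[0], keys[-1]]
    # Keep the worst offender and simplify the two halves independently.
    left = prune_keys(keys[: worst_index + 1], tolerance)
    right = prune_keys(keys[worst_index:], tolerance)
    return left[:-1] + right
```

A larger tolerance leaves fewer keys to polish by hand at the cost of flattening subtle motion.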

FREE easy-to-learn resources to get up to speed quickly

Our support team is second to none. Check out our huge gallery of video tutorials and online knowledgebase to get you started and teach you best practices for animating in Retargeter. You can also work directly with our Support Team to get the best results.

Distinguishing Features of Retargeter 5.0

POSE-BASED WORKFLOW

Poses are an essential part of animating with Retargeter. Much like creating Training Frames in Analyzer, the user decides where the most extreme and distinct facial shapes are occurring and poses the character appropriately on the given frame. These poses are then used in Retargeter’s calculation to apply animation to the entire shot. If you’re unhappy with the results you can adjust your poses, add more, delete some, or make an infinite number of tweaks and check your updated results in near realtime.

POSE SUGGESTION

The first and most useful option for creating poses is using the Auto-Pose feature. This feature makes suggestions as to what video frame the user should create poses on based on Retargeter examining the tracking data from Analyzer and figuring out where the most significant shapes are occurring. It acts as a guide, telling the user the ideal places to create poses.
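One simple way to picture “finding where the most significant shapes occur” is farthest-point sampling over the tracking data. The sketch below is an illustrative stand-in, not the Auto-Pose algorithm, and it assumes tracking data is available as one feature vector per frame.

```python
import math


def suggest_pose_frames(tracking, count):
    """Pick up to `count` frame indices whose tracking data is most distinct.

    tracking: list of per-frame feature vectors (lists of floats).
    Starts from the frame farthest from the average, then repeatedly adds
    the frame farthest from every frame already chosen.
    """
    if not tracking or count <= 0:
        return []
    dims = len(tracking[0])
    mean = [sum(frame[d] for frame in tracking) / len(tracking) for d in range(dims)]
    first = max(range(len(tracking)), key=lambda i: math.dist(tracking[i], mean))
    chosen = [first]
    nearest = [math.dist(frame, tracking[first]) for frame in tracking]
    while len(chosen) < min(count, len(tracking)):
        candidate = max(range(len(tracking)), key=lambda i: nearest[i])
        if nearest[candidate] == 0.0:
            break  # every remaining frame duplicates a chosen one
        chosen.append(candidate)
        nearest = [min(n, math.dist(frame, tracking[candidate]))
                   for n, frame in zip(nearest, tracking)]
    return sorted(chosen)
```

Because each new suggestion is as different as possible from the ones already chosen, the first few frames tend to cover the extremes of the performance.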

SHARED POSE LIBRARIES

Have several animators working on one character? Utilizing the Shared Pose Database functionality of Retargeter, you can store any pose you create for use with future shots, or for use with other animators. This saves time because, instead of creating new poses every time you animate a shot, you can import the already created poses, retarget from the Shared Pose Database directly, or have the Auto-Pose feature use your shared pose library to automatically pose your character on the suggested frame.

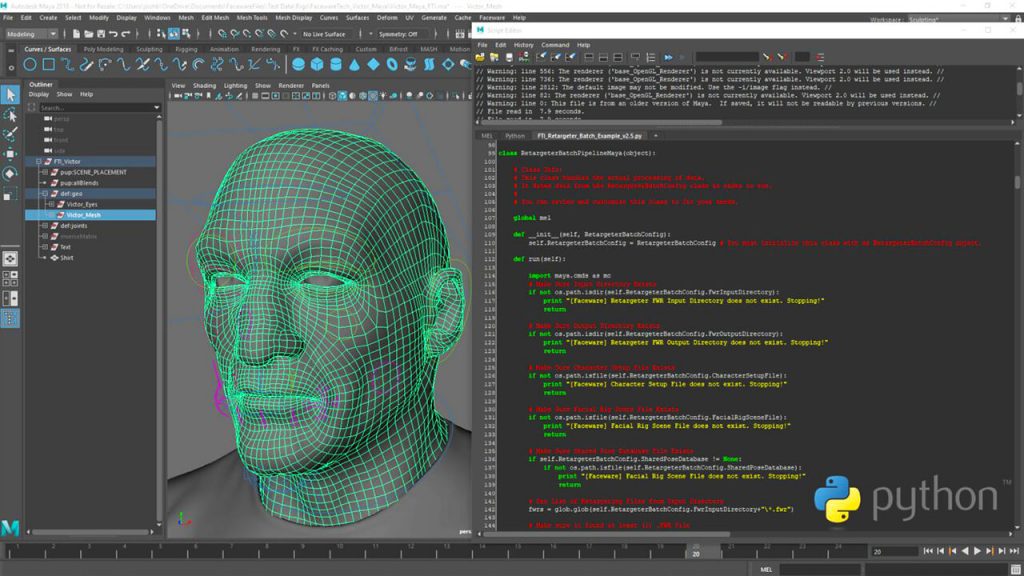

AUTOMATION API

Have a huge amount of animation to create? Since Retargeter is a plugin to the Autodesk 3D animation apps, batch scripting capabilities are based on running commands in the native scripting language of the host application. In Maya the batch scripting commands can be run in MEL or Python; in 3DS Max they can be run in Maxscript. Create rapid workflows for semi- or fully automated results.

(official website : Faceware Technologies, Inc. – Retargeter)

CREATION SUITE SOFTWARE

Realtime for iClone

Reallusion’s partnership with Faceware enables iClone 7 to achieve real-time facial motion capture and recording. This will empower indies and studios of all levels, giving them access to facial motion capture tools that are fast, accurate and markerless—all from a PC webcam.

Markerless facial capture for real-time animation

Features

- Calibrate in one second

- Use any PC Camera to create animation in iClone

- Easily edit-and-polish your data after capture

- Compatible with industry standard characters

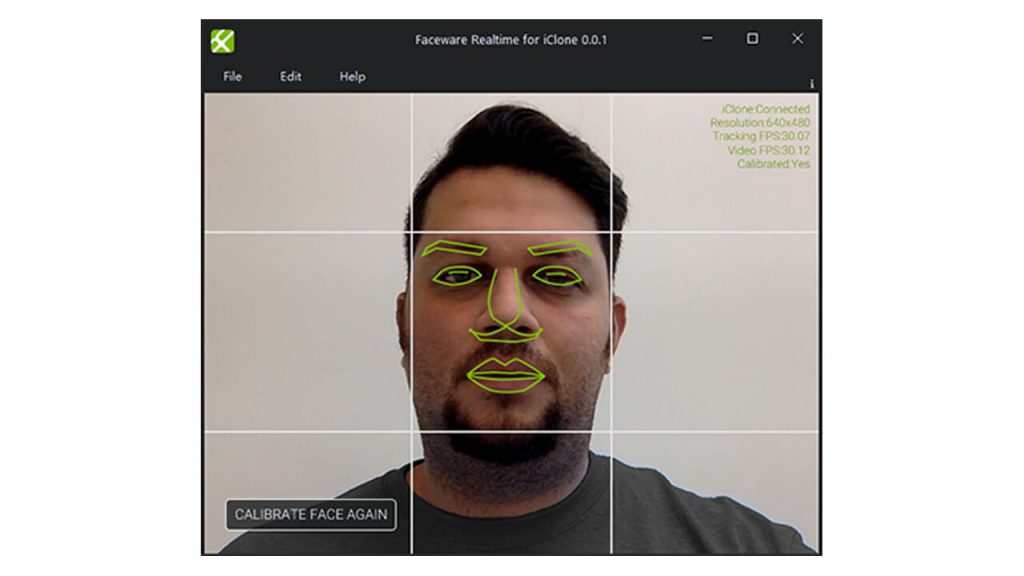

Fast, Accurate, Markerless Facial Tracking

With Realtime for iClone and the iClone Motion LIVE Plug-in for Faceware, you can now create, record, and edit facial animation in iClone 7 extremely easily. Capture your facial expressions using a web cam, GoPro, Mark IV Headcam, or any standard PC camera. Alternatively, you can use an image sequence for finer control over the performance.

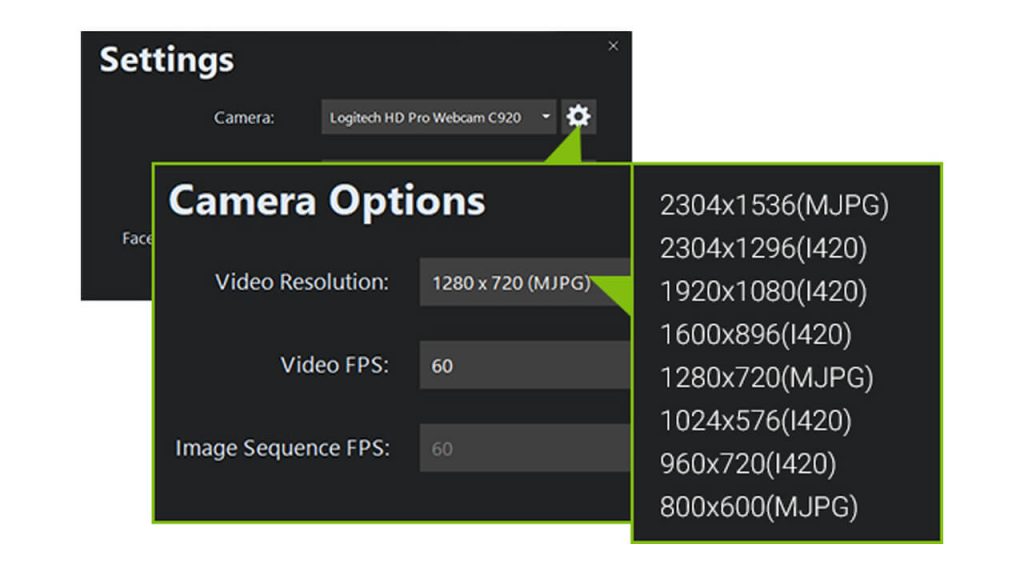

Easily Adjust Camera Settings For Optimal Performance

Realtime for iClone gives you access to any camera connected to your PC. Once a camera is selected, you can easily adjust settings such as video resolution and FPS to get the optimal performance from both your camera and the software. We recommend capturing your face at a minimum of 30 FPS (frames per second) though 60 FPS is ideal! You’ll get higher fidelity lip sync animation at a higher FPS. We also recommend keeping the resolution at or below 720p for optimal use. Many people will find that the 640×480 resolution is more than enough.
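The recommendations above are easy to encode as a quick sanity check for a capture mode. This is a sketch with a hypothetical function name; it treats 720p as 1280×720 and only reports advice, it does not talk to a camera.

```python
def check_capture_settings(width, height, fps):
    """Return advisory notes for a capture mode, based on the guidance above."""
    notes = []
    if fps < 30:
        notes.append("below the recommended minimum of 30 FPS")
    elif fps < 60:
        notes.append("acceptable, though 60 FPS is ideal for lip sync")
    if width > 1280 or height > 720:
        notes.append("resolution above 720p; consider lowering it")
    return notes
```

An empty list means the mode matches the ideal settings, for example 640×480 at 60 FPS.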

Fast and Simple Calibration

Calibrating the technology to your face is an important part of how Realtime for iClone works. Calibration establishes a baseline by ‘teaching’ the technology what your face looks like in a neutral pose. Unlike other tracking software, calibration in Realtime for iClone is fast and simple, allowing you to train the technology in a single button press and adjust on-the-fly for optimal results. In the video tutorials created by Reallusion, you can see how Calibrating in slightly different poses might give you enhanced results in iClone.

Compatible with Industry Standard 3D Characters

iClone 7 characters made with Character Creator have been updated with 60 face morphs, fully optimized for Faceware tracking data. For Daz Genesis characters (generations 1, 2, 3, and 8), auto-conversion tools and 60 face morph DUF profiles are provided.

Distinguishing Features of Realtime for iClone

TRACKING INSPECTOR

Instant, dynamic feedback. These meters let you view your animation data in real time. Observe the relationship between your facial performance and the real-time data stream and adjust the result.

CUSTOM CAPTURE PROFILES

Define your own expression style with facial muscle sliders and save it as a Custom Capture Profile. Default 60-morph capture profiles are optimized for StaticCam and HeadCam tracking models. You can further take advantage of the Search function to quickly access sliders!

FEATURE-BASED FILTERS

Globally or separately control the signal input strength for brows, eyelids, eyeballs, mouth, jaw, cheek, and head rotation. Easily capture stylized characters with proper strength settings for toning down or exaggerating features. Save settings for characters.

FACE KEY TIMELINE

Edit face capture performances in post with the iClone facial timeline. Access the motion clip track to edit and offset with face keys. Control expression intensity through slider bars.

(official website : Faceware Technologies, Inc. – iClone)

PERFORMANCE CAPTURE SOFTWARE

Shepherd

Shepherd combines all the integral features a face capture operator needs in one innovative and thoughtful app. Sync your entire performance capture workflow with timecode support and additional functionality to lync your Vicon, Xsens or Optitrack system to trigger your facial systems.

The facial motion capture command center

Features

- Mark IV Headcam operator software for AJA KiPro devices

- Simplicity on-set for more performance-focused management

- One streamlined operator-friendly interface for all performers

- Full timecode support with triggers supported from Vicon, Xsens, and Optitrack Systems

General Workflow for Shepherd 1.0

SETUP: ADDING KIPRO DEVICES

Faceware Shepherd is a software application built to help you easily sync, configure, and manage multiple recording decks at the same time. Shepherd solves the issue of a single operator having to efficiently manage more than one recording device, often a big problem on more complex shoots.

Shepherd’s whole purpose in life is to simplify the management of multiple recording devices. The first step in this process is introducing Shepherd to your recording equipment. This can be done in several ways:

- By clicking the ADD KI PRO DEVICE button from the main UI.

- Using the Add Network Devices Shortcut (CTRL+D).

- From the menu bar: Manage>Find/Add Ki Pro Device(s).

LYNC TO MOCAP SYSTEMS

When you add a Mocap System to Shepherd and turn the Mocap Lync toggle to Enabled, you are now in Mocap Lync Recording Mode. In Mocap Lync Recording Mode, you can trigger recording of face capture from your body mocap system. Mocap Lync requires your body mocap software to be set up to broadcast Start/Stop Recording triggers and Capture Names. These triggers are XML messages transmitted via UDP across your LAN. When Mocap Lync is enabled, Shepherd will listen for these messages from your body mocap software to automatically Start/Stop Recording and keep your body and facial mocap Capture Names consistent. Mocap systems that support the Lync feature:

- Vicon Shogun

- Vicon Blade

- Optitrack Motive

- Xsens MVN Animate Pro
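The trigger mechanism described above (XML messages over UDP carrying Start/Stop and a Capture Name) can be sketched with the Python standard library. The XML element and attribute names below are hypothetical placeholders, since each mocap package defines its own message format, and the port is left to the caller. This is not Shepherd’s implementation.

```python
import socket
import xml.etree.ElementTree as ET

# Hypothetical message shape, for illustration only:
#   <CaptureStart><Name VALUE="Scene12_Take03"/></CaptureStart>
#   <CaptureStop><Name VALUE="Scene12_Take03"/></CaptureStop>
ACTIONS = {"CaptureStart": "start", "CaptureStop": "stop"}


def parse_trigger(datagram):
    """Return (action, capture_name) for a trigger message, or None if it is not one."""
    try:
        root = ET.fromstring(datagram.strip(b"\x00 \r\n"))
    except ET.ParseError:
        return None
    action = ACTIONS.get(root.tag)
    name = root.find("Name")
    if action is None or name is None:
        return None
    return action, name.get("VALUE", "")


def listen(port, on_trigger):
    """Block forever, calling on_trigger(action, capture_name) for each valid message."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("", port))
        while True:
            data, _sender = sock.recvfrom(65535)
            trigger = parse_trigger(data)
            if trigger:
                on_trigger(*trigger)
```

Separating parsing from the socket loop keeps the message handling testable without any network traffic.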

RECORD PERFORMANCES

Shepherd provides two ways of recording: Mocap Lync Recording Mode and Manual Recording Mode.

When there is no Mocap System selected (or the Mocap Lync toggle is Disabled), Shepherd is in Manual Recording Mode. Shepherd will ignore any Start/Stop recording triggers from Mocap Systems. In this mode, you control recording manually by inputting the Clip Name/Take Number and clicking the Record button via your Transport Controls. In this mode you also control playback.

When you add a Mocap System to Shepherd and turn the Mocap Lync toggle to Enabled, you are now in Mocap Lync Recording mode. Shepherd will prioritize the user interface for listening for Start/Stop recording triggers from your selected Mocap System. Elements like the Clip Name/Take Number fields, Transport Controls, and Clip playback are disabled in this mode.

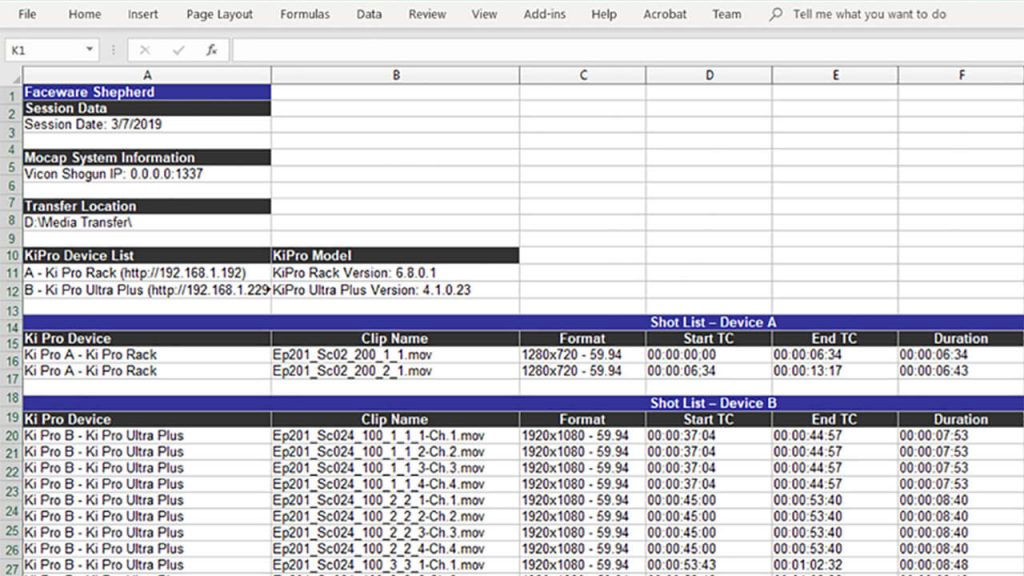

TRANSFER & ORGANIZE MEDIA

Shepherd provides multiple ways to transfer and organize your recordings. The File menu contains an option to Export Session Details, which lets you create a JSON or XLSX file that contains the details of your current session, such as Mocap System Name, Connected Ki Pro Devices, and the contents of your playlist with each Clip’s relevant info. You can also Export Session Details when you Transfer All Clips via the Manage menu. Or, you can use the Playlist clip context menu to pick and choose which clips you want to download. We know that one size does not always fit all, and with Shepherd you can find the workflow that works best for you.
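For pipelines that consume session details downstream, it helps to picture what such a JSON document might contain. The sketch below builds one from the fields named above; the key names and structure are hypothetical and do not reflect Shepherd’s actual export schema.

```python
import json


def session_details(mocap_system, devices, clips):
    """Serialize session details to a JSON string. Key names are hypothetical.

    clips: iterable of (clip_name, take_number, device_name) tuples.
    """
    document = {
        "mocap_system_name": mocap_system,
        "connected_devices": list(devices),
        "playlist": [
            {"clip_name": name, "take": take, "device": device}
            for name, take, device in clips
        ],
    }
    return json.dumps(document, indent=2)
```

A review or editorial tool can then load the file with `json.loads` and look up clips by name or device.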

Features of Shepherd 1.0

Streamlined operator interface

Shepherd combines all the integral features a face capture operator needs in one innovative and thoughtful app, giving you more time to focus on the things that really matter – like camera framing, talent performance and capture accuracy. With less to micromanage, Shepherd gives you the freedom to work faster and more effectively.

Body mocap integrations

Integrating with the industry’s leading Mocap Systems, Shepherd allows your body and face capture systems to be combined into one streamlined system. Now you can simultaneously trigger face capture with body capture, and ensure the continuity of capture names. Shepherd creates a more unified, succinct and automated motion capture process.

Capture multiple face systems with ease

An unlimited number of face recording devices can be added to Shepherd (including your witness cameras), giving you one modern interface to control recording, playback, see device info and health, timecode input, and view clips across all of your devices. Save both time and money while Shepherd works hard, and you work smart.

Media transfer and producer tools

With Shepherd, there’s no need to pull the hard drive from your digital recording device or access the built-in AJA web interface to transfer recorded clips. With a couple of clicks, you can transfer a single clip, multiple clips, or all the clips across all of your devices remotely. You can even create a handy document detailing clip information that can be used for review, editorial, or to support an existing Faceware Batch Pipeline.

Distinguishing Features of Shepherd 1.0

MOCAP SYSTEM LYNC

Shepherd’s Mocap System Lync puts your body mocap system in control of Shepherd’s face capture system. Lync unifies your Vicon, Xsens or Optitrack System with your Faceware Headcams enabling unprecedented control of your mocap shoot. This is the mocap feature you didn’t know you were waiting for.

SMART FUNCTIONALITY

By combining the various functions associated with facial motion capture and centralizing them into a single application, Shepherd integrates the data and options you need most in one program, including:

- Add/Remove Devices

- Arm/Disarm Decks

- Playlist Grouping

- Playlist Sorting

- Device List Reorganization

- One-Click Network Device Detection

- Device Renaming

- Displayed Input Settings

- Playback Options

- Hard Drive Storage Alerts

- Device Status

- Multi-Channel Changer (Ki Pro Ultra Plus Only)

- and more …

With everything you need up front and center, Shepherd allows you to work smarter instead of harder.

CUSTOM CHANNEL IDENTIFIERS

Exclusive to the Ki Pro Ultra Plus, Shepherd now offers Custom Channel Identifiers for all your multi-channel recording needs. Meant for teams who utilize Ki Pro Ultra Plus multi-channel settings, Channel Identifiers allow you to append your own unique attribute schema to any series of takes for easier identification and customization.

TABLET INTEGRATION

Shepherd runs on Windows 10 devices, including tablets. Now you have the flexibility to control your face capture shoot literally in the palm of your hands. Untether yourself from your workstation and take charge on your own terms.

(official website : Faceware Technologies, Inc. – Shepherd)